I managed to muddle my way through the free Internet version of the Stanford Introduction to Artificial Intelligence (AI) course. "Congratulations! You have successfully completed the Advanced Track of Introduction to Artificial Intelligence ..." says my Statement of Accomplishment.

Before putting on my analyst mask, I would like to thank Stanford University and particularly the instructors, Sebastian Thrun and Peter Norvig, for conducting the course and, above all, for making it free of charge. I am hoping they will leave the instruction videos up for a while; there are some I would like to go over again.

I got a good review of Bayes' Rule, basic probability, and some simple machine learning algorithms. I had not worked on Planning algorithms before, so that was of some interest. Markov Models had always been a bit vague to me, so that section helped me nail down the idea. Games and game theory seem to have made little progress since the 1940's, but I guess they have to be covered, and I did get clear on how Minimax works. Computer vision seemed kind of weak, but then you can't assume students know basic optics, and at least we learned how to recognize simple features. Robotics was a prior interest for me, and I did not know about Thrun's obvious favorite, Particle Filters, which are a useful paradigm for spatial positioning (aka localization).

The last two units were on Natural Language Processing, and that is a good place to start a critique (keeping in mind that all this material was introductory). Apparently you can do a lot of tricks processing language, both in the form of sounds/speech and written text, without the algorithms understanding anything. They showed a way to do pretty decent inter-ethnic language translations, but the usefulness depends on humans being able to understand at least one language.

Plenty of humans do plenty of things, including paid work, without understanding what they are doing. I suppose that could be called a form of artificial intelligence. Pay them and feed them and they'll keep up those activities. But when people do things without understanding (I am pretty sure some of my math teachers fell into that category), danger lurks.

The Google Car that drives itself around San Francisco (just like Science Fiction!) just demonstrates that driving a Porsche proves little about your intelligence capabilities. Robot auto-driving was a difficult problem for human engineers to solve. They were able to solve it because they understood a whole lotta stuff. Particle Filters, which involve probability techniques combined with sensory feedback to map and navigate an environment, are a cool part of the solution. If I say "I understand now: I have been walking through a structure, and to get to the kitchen I just turn left at the Picasso reproduction," I may be using the word understand in a way that compares well with what we call the AI capabilities of the Google Car. Still, I don't think the Car meets my criteria for machine understanding. The car might even translate from French to English for its human cargo, but I still classify it as dumb as a brick.
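To make the Particle Filter idea concrete, here is a minimal sketch of my own, not anything taken from the course or from the Google Car. It assumes a one-dimensional corridor, a robot that moves about one unit per step, and a noisy range sensor that reports distance from a wall at position 0. The function names, the noise levels, and the corridor length are all made up for illustration.

```python
import math
import random


def gaussian(x, mu, sigma):
    """Probability density of a normal distribution at x."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))


def particle_filter_step(particles, move, measurement, rng,
                         move_noise=0.5, sense_noise=2.0):
    """One predict / weight / resample cycle (all parameters are illustrative)."""
    # 1. Predict: shift every guess by the commanded motion, plus random jitter.
    moved = [p + move + rng.gauss(0.0, move_noise) for p in particles]
    # 2. Weight: guesses that agree with the sensor reading score higher.
    weights = [gaussian(measurement, p, sense_noise) for p in moved]
    if sum(weights) == 0.0:
        return moved  # no guess is plausible; keep them all rather than fail
    # 3. Resample: draw a new population in proportion to the weights.
    return rng.choices(moved, weights=weights, k=len(moved))


def run_demo(seed=0, steps=20, n_particles=500):
    """Simulate a robot starting at 20.0 and return (true position, estimate)."""
    rng = random.Random(seed)
    true_pos = 20.0
    # Start with no idea where the robot is: guesses spread over the corridor.
    particles = [rng.uniform(0.0, 100.0) for _ in range(n_particles)]
    for _ in range(steps):
        true_pos += 1.0
        measurement = true_pos + rng.gauss(0.0, 2.0)  # noisy range to the wall
        particles = particle_filter_step(particles, 1.0, measurement, rng)
    estimate = sum(particles) / len(particles)
    return true_pos, estimate
```

Calling `run_demo()` returns the simulated true position and the mean of the surviving particles, which should end up close together. Nothing in the loop knows what a corridor or a wall is, which is rather the point of the paragraph above.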

Hurray! Despite my advancing age, lack of a PhD., less than brilliant business model, and tendency to be interested in too many different things to be successful in this age of specialization, no one seems to have gotten to the essence of how humans understand, and are aware of, the world and themselves.

If the human brain, or its neural network subcomponents, did Particle Filters, how would that work? I know from much practice that bumping around in the dark can lead to orientation or disorientation, depending on circumstances. On the other hand the random micro-fine movements of the eye might be a physical way of generating randomness to test micro-hypotheses that we are not normally consciously aware of.

We sometimes say (hear Wittgenstein in my voice) that someone has a shallow understanding of something. "Smart enough to add columns of numbers, not smart enough to do accounting," or "Good at game basics, but unable to make strategic decisions." Let me put it another way: in some ways the course itself was an intelligence test. I imagine it would be very rough for anyone without a background in algebra and basic probability theory. The students in the class already knew a lot, and had to learn difficult things.

I want to know how our bodies, our brains, learn difficult things. The only way I will be able to be sure that I understand how that is done is if I can build a machine that can do the same thing.

Monday, December 26, 2011

Wednesday, November 16, 2011

stuck

Maybe we need the equivalent of a quantum hypothesis for the brain, in the sense that it may not be possible to construct a correct model based on classic principles, no matter how complex.

Monday, October 24, 2011

Stanford AI Class thoughts, and a brief tour of AI history

"people who face a difficult question often answer an easier one instead, without realizing it"

— Daniel Kahneman,


Despite time management issues (which will only get worse this week) I managed to struggle through the first two weeks, or four units, of the online Stanford introduction to artificial intelligence course.

In the past I had already tried to struggle through Judea Pearl's Probabilistic Reasoning in Intelligent Systems. That was published well over 20 years ago, and yet this course uses many of the same examples. The course is much more about working out actual examples; it is practical, not so theoretical. We've covered Bayes networks and conditional probability, both concepts I had already learned because Numenta was using them. Pearl's book contains a lot of material about wrong directions to take; the Stanford course seems to be focussed on what actually works, at least for Google.

My impression is still that the Stanford AI paradigm, while very practical, is not going to provide the core methods for truly intelligent machines, which I characterize as machine understanding. I think this largely because I am ancient and have watched quite a few AI paradigms come and go over the decades.

When AI got started, let's say in the 1950's, there was an obsession with logic as the highest form of human intelligence (often equated with reasoning). That computers operated with logic circuits seemed a natural match. Math guys who were good at logic tended to deride other human activities as less difficult and requiring less intelligence. Certain problems, including games with limited event spaces (like checkers), could be solved more rapidly by computers (once a human had written an appropriate program) than by humans. The prediction was that by the sixties, or by the seventies at the latest, computers running AI programs would be smarter than humans. In retrospect, this was idiotic, but the brightest minds of those times believed it.

One paradigm that showed some utility was expert systems. To create one of these, experts (typical example: a doctor making a diagnosis) were consulted to find out how they made decisions. Then a flow chart was created to allow a computer program to make a decision in a similar manner. As a result a computer might appear intelligent, especially if equipped with the then more difficult trick of imitation voice output, but today no one would call such a system intelligent. It is no more intelligent than the early punch card sorters that once served as input and output for computers that ran on vacuum tubes.

In the 1980's there was a big push in artificial neural networks. This actually was a major step towards machines being able to imitate human brain functions. It is not a defunct field. Some practical devices today work with technologies developed in that era. But with scaling, the problems grew faster than the solutions. No one could build an artificial human brain out of the old artificial neural networks. We know that if we can exactly model a human brain, down to the molecular (maybe even atomic) level, we should get true artificial intelligence. But simplistic systems of neurons and synapses are not easy to assemble into a functioning human brain analog.

The Stanford model for AI has been widely applied to real world problems, with considerable success. This probabilistic model allows it to deal with more complex data than the old logic and expert system paradigms ever could. Machine systems really can learn new things about their environment and store and organize that information in a way that allows for practical decision making. Clearly that is one thing human brains can do, and it is a lot more difficult than playing in a set-piece world like tic-tac-toe or even chess.

Sad as the state of human reasoning can be at times, and as slow as we are to learn new lessons, and as proud as we are of our least bouts of creativity, (and as much as we may occasionally ignore the rule against run-on sentences), I think the Stanford model is not, by itself, going to lead to machine understanding. The human brain has a lot of very interesting structures at the gross level and at the synaptic level. Neurologists have not yet deciphered them. Their initial "programming," or hard-wiring is purely the result of human evolution.

When is imitated intelligence real intelligence? When does a machine (or a human, for that matter) understand something, as opposed to merely changing internal memory to reflect the external reality?

Then again, maybe a Stanford model computer/program/input/output system would have done better at the Stanford AI course than I have. I certainly have not been getting all the quizzes and homework problems right on the first try. On the other hand, I think it will be some good long time before a machine can read, say, a book on neurology and carry on an extended intelligent conversation about it.

Thursday, September 22, 2011

Brain Modeling Computational Trajectory

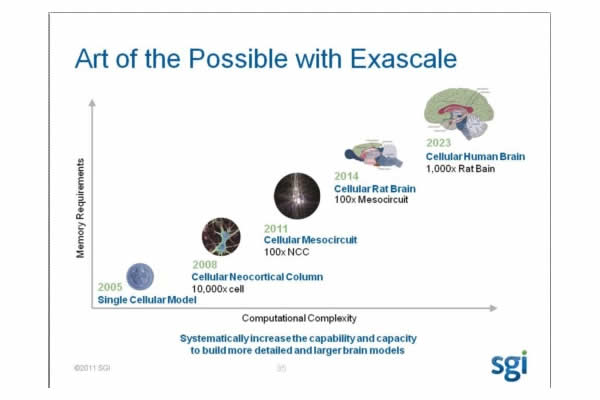

SGI is a manufacturer of high-performance computers, what might be called small supercomputers. I was listening to their analyst day (I own stock in SGI) this morning and saw an interesting slide, which I reproduce here:

With regard to machine understanding, this is the direct assault method. At some point when the human brain is modeled in sufficient detail the construct should display human memory, intelligence, and even consciousness or self-awareness. It is conceivable that a detailed computer model might exhibit artificial intelligence or understanding but leave us still unable to comprehend the essence of what is happening. More likely we will be enlightened and therefore able to construct working machine entities that have true intelligence and understanding, but are not exactly modeled on the human nervous system.

There are different levels for modeling biological systems of neurons and brain matter. We might model on the atomic, molecular, sub-cellular, cellular, or neural-functional level. It is not clear what level of detail is assumed in the SGI projection. Best guess is that it has a model for individual neurons, and that complexity is added by adding more neurons to the network. That would be the main difference between modeling a "cellular neocortical column," a mesocircuit, a rat brain, and a human brain.

SGI already makes computers for researchers in this field. Of course other vendors' computers can be used. The advantage SGI brings to the table today is the ability to build a large model in computer memory chips (the processors see a big, unified memory), as opposed to hard disks.

I would be very impressed if someone could correctly model a functioning rat brain by 2014. Keep in mind that just because the computer power is available to do it does not mean that any given team's model is correct. I wonder what proof of concept would consist of? To test such a brain you would need a test environment. That might be a simulation, but it could also be robotic. Keep in mind that much of what a rat brain does relates not to what we think of as awareness of the environment, but to maintaining body functions.

Another issue is the initial state problem. Suppose that you "dissect" a rat so that you know the relative placement of every neuron in its central nervous system. Still, to make your model work, you would need a functional initial state for all the cells. You need to know how synapses are weighted. Probably someone is working on the boot-up of mammal brains during embryonic development. Even just getting the genetically re-programmed neurotransmitter types for each synapse to each cell seems like a more difficult problem than making a generalized computer model based on a neural map plus a generalized neuron.

Apparently there is plenty of work on this project for everyone.


Tuesday, August 16, 2011

Stanford AI course

I signed up for the free Stanford course, An Introduction to Artificial Intelligence, to be taught by Sebastian Thrun and Peter Norvig this fall. I figure it can't hurt, and free is a good price.

Thursday, July 21, 2011

Naive Bayes Classifier in Python

I have been busy with many things: indexing a book on software management; trying to learn math things I would have learned when I was 18 or 19 if the Vietnam War had not made me decide to major in Political Science; advising investors about the value of Hansen Medical's robotics technology and Iteris's visual analysis software for cars and highway monitoring.

So even though I continue to think about MU, and to study, I have had nothing in particular worth reporting here. However, I came across a good introductory page on using Bayes probability with the Python programming language. If that is what you need, here it is:

Text Categorization and Classification in Python with Bayes' Theorem
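For a taste of what such a classifier looks like, here is a minimal sketch of my own; it is not the code from the linked page. It assumes a bag-of-words model with add-one (Laplace) smoothing, and the training sentences and labels are invented purely for illustration.

```python
import math
from collections import Counter, defaultdict


def train(docs):
    """Count labels and per-label word frequencies from (text, label) pairs."""
    label_counts = Counter()
    word_counts = defaultdict(Counter)
    vocab = set()
    for text, label in docs:
        label_counts[label] += 1
        for word in text.lower().split():
            word_counts[label][word] += 1
            vocab.add(word)
    return label_counts, word_counts, vocab


def classify(text, model):
    """Return the label with the highest log posterior under naive Bayes."""
    label_counts, word_counts, vocab = model
    total_docs = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, n_docs in label_counts.items():
        # Prior: how common the label is in the training data.
        score = math.log(n_docs / total_docs)
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            if word not in vocab:
                continue  # ignore words never seen in training
            # Likelihood with add-one smoothing, so unseen pairs are not zero.
            count = word_counts[label][word]
            score += math.log((count + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Hypothetical training data, made up for this example.
model = train([
    ("robot sensor motor", "robotics"),
    ("robot arm motor control", "robotics"),
    ("stock price earnings", "finance"),
    ("earnings report stock analyst", "finance"),
])
```

With this toy data, `classify("robot motor", model)` picks "robotics" and `classify("stock earnings", model)` picks "finance". The "naive" part is the assumption that words are independent given the label, which is false for real text but often works well enough.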

And I would love to play with a Kinect, but where would the time come from?


Labels:

Bayes,

categorization,

classification,

machine understanding,

mu,

Python,

robots

Monday, April 18, 2011

Handling Complex Numbers

I have not been logging my MU activity very well. I am trying to develop a simple system to test my ideas. The first one bogged down and went nowhere. At the same time looking at a lot of possibly useful math, including Hilbert spaces.

Today was thinking about neural network handling of complex numbers. Decided to let others do my thinking for me and came upon this paper after a search. Reminds me that I think all science journals should be published on the net with no charge to read; that would accelerate the advances we all desire.

Enhanced Artificial Neural Networks Using Complex Numbers by Howard E. Michel and A. S. S. Awwal. I don't know if I can use their specific model, but it is good food for thought.

Tuesday, March 1, 2011

gradient covariance

Thinking, thinking, thinking. Trying to work towards an example.

Spent more time than I meant making sure I understand how gradients act, their covariant nature under coordinate changes. Wrote out a "simple" example, just a 2D transformation of the gradient of a simple function. But it still took a while. I made an arithmetic, an algebra, and a calculus mistake the first time through; am I ever rusty!

If you are looking for an example of covariance of a tensor or vector, try it:

gradient covariance
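For readers who would rather check the idea numerically, here is a small sketch that is separate from the worked example linked above. It assumes a function f(x, y) = x^2 + 3y and a linear change of coordinates x = 2u + v, y = u - v, both chosen arbitrarily for illustration. The covariant rule says the gradient components in (u, v) are the old components multiplied by the partial derivatives of the old coordinates with respect to the new ones.

```python
def f(x, y):
    """An arbitrary example function."""
    return x ** 2 + 3 * y


def grad_f(x, y):
    """Analytic gradient of f in the (x, y) coordinates."""
    return (2.0 * x, 3.0)


# Jacobian of the coordinate change: rows are x, y; columns are u, v.
# x = 2u + v, y = u - v  (an assumed example transformation)
J = ((2.0, 1.0),
     (1.0, -1.0))


def to_xy(u, v):
    """Map new coordinates (u, v) to old coordinates (x, y)."""
    return (J[0][0] * u + J[0][1] * v, J[1][0] * u + J[1][1] * v)


def grad_in_uv(u, v):
    """Covariant rule: df/du_i = sum over j of (df/dx_j) * (dx_j/du_i)."""
    x, y = to_xy(u, v)
    gx, gy = grad_f(x, y)
    return (gx * J[0][0] + gy * J[1][0], gx * J[0][1] + gy * J[1][1])


def numeric_grad_uv(u, v, h=1e-6):
    """Central finite differences of f expressed directly in (u, v)."""
    def g(a, b):
        return f(*to_xy(a, b))
    return ((g(u + h, v) - g(u - h, v)) / (2 * h),
            (g(u, v + h) - g(u, v - h)) / (2 * h))
```

At (u, v) = (1, 2) the covariant rule gives (19, 5), and the finite-difference estimate agrees to within rounding error. A displacement vector would instead transform with the inverse of this Jacobian, which is the contrast that makes the gradient "covariant".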

Now to make neuron-like structures do the math for me ...


Labels:

coordinate system,

covariance,

gradient,

tensors,

transformations,

vectors

Saturday, January 22, 2011

bogged down

Earlier this week I thought I was on the verge of a breakthrough, but instead I sank into the bog of mathematics, analytic issues, and philosophical delusions.

There is a tendency to think of the identification of an object, say a dog or better still, a specific dog, as coinciding in the brain with the firing of a specific neuron, or perhaps a set of neurons. That might in turn fire a pre-verbal response that one could be conscious of, then the actual verbal response, whether as a thought or as speech: "Hugo," my dog.

Some would make this a paradigm for invariance. Hugo can change in position, wear a sweater, age or even die, but the Hugo object is invariant.

But that, the noun, is the end result. It is not the system that creates invariance. Nor do I think that the system of building up small clues, as described by Jeff Hawkins and implemented to some extent by Numenta, is sufficient to explain intelligence, though it might serve for object identification.

I am even wondering about Hebbian learning, in which transitions in neural systems are achieved by changing weights of neural connections. It is simple to model, but if it isn't what is really going on in the brain (or is only part of what is really going on), assuming it is sufficient could be a block to forward progress.
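To show just how simple it is to model, here is a minimal sketch of the plain Hebb rule for a single linear neuron: each weight grows in proportion to the product of its input and the neuron's output. The function name, the learning rate, and the starting weights are assumptions for illustration, and this is not a claim about what real neurons do.

```python
def hebbian_update(weights, inputs, rate=0.1):
    """Plain Hebb rule for one linear neuron: dw_i = rate * x_i * y."""
    # The neuron's output is the weighted sum of its inputs.
    output = sum(w * x for w, x in zip(weights, inputs))
    # Connections whose input was active while the neuron fired get stronger.
    return [w + rate * x * output for w, x in zip(weights, inputs)]


def repeat_pattern(weights, inputs, times, rate=0.1):
    """Present the same input pattern several times in a row."""
    for _ in range(times):
        weights = hebbian_update(weights, inputs, rate)
    return weights
```

Starting from weights `[0.5, 0.5]` and repeatedly presenting the input `[1.0, 0.0]`, the first weight keeps growing while the second never changes. The unbounded growth is a known weakness of the plain rule, and practical models add some form of normalization or decay.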

Maybe I am way off track here. I just read again about how no one could explain all the spectral data accumulated in the 19th century. Then Bohr threw out two assumptions about electrodynamics and added a very simple assumption, that electrons near an atomic nucleus have a minimal energy orbit, and quantum physics finally was off to the races.

On the other hand, sometimes a slow steady program like Numenta's works better than waiting for a breakthrough. I'm giving my neurons the weekend off and going to the German film festival at the Point Arena Theater.


Thursday, January 13, 2011

Pointing Choices

Quote of the day:

"This convention is at variance with that used in many expert systems (e.g. MYCIN), where rules point from evidence to hypothesis (e.g., if symptom, then disease), thus denoting a flow of mental inference. By contrast, the arrows in Bayesian networks point from causes to effects, or from conditions to consequences, thus denoting a flow of constraints attributed to the physical world."

Judea Pearl, Probabilistic Reasoning in Intelligent Systems, p. 151
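A small sketch may help show what the two directions mean in practice. The network stores probabilities in the causal direction (disease to symptom), and Bayes' rule is then used to reason in the evidential direction (symptom to disease). The numbers below are hypothetical, chosen only for illustration, and the function name is my own.

```python
def posterior(prior, p_effect_given_cause, p_effect_given_no_cause):
    """Bayes' rule: P(cause | effect) from causal-direction probabilities."""
    # Total probability of seeing the effect, with or without the cause.
    evidence = (p_effect_given_cause * prior
                + p_effect_given_no_cause * (1.0 - prior))
    return p_effect_given_cause * prior / evidence


# Hypothetical numbers, stored the way the arrow points (cause -> effect).
P_DISEASE = 0.01                  # P(disease)
P_SYMPTOM_IF_DISEASE = 0.90       # P(symptom | disease)
P_SYMPTOM_IF_HEALTHY = 0.05       # P(symptom | no disease)

# Inference runs against the arrow: from the observed symptom to the disease.
p_disease_given_symptom = posterior(
    P_DISEASE, P_SYMPTOM_IF_DISEASE, P_SYMPTOM_IF_HEALTHY)
```

With these made-up numbers the result is 2/13, about 0.154, so the symptom raises the probability of disease from 1% to roughly 15%. An expert system rule of the "if symptom, then disease" kind would have to store that figure directly, and it would go stale as soon as the prior changed.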


Wednesday, January 12, 2011

Eyes Follow Brain Shifting of Attention

In case you missed it:

Human Brain Predicts Visual Attention Before Eyes Even Move

This is a confirmation that one of the principal jobs of the brain is to make predictions, then use the senses to confirm or deny the predictions.


Labels:

attention,

brain,

eyes,

predictive memory

Monday, January 3, 2011

Tensor Introduction

Largely as a prelude to my own work, I posted an introduction to tensors at OpenIcon. It may improve as time passes.
